I am a student working through the book as an independent study with a professor of mathematical logic. We are trying to understand the clock cycles at the heart of the memory chips in chapter 3. Neither of us has a background in computer engineering or architecture. Below is our abstract analysis of the Bit chip, and we would like to know whether it is correct:

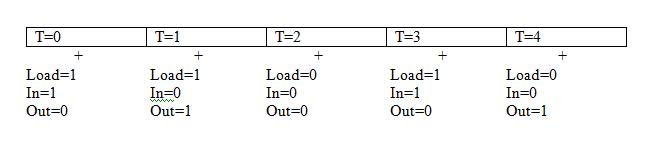

Note: each clock cycle is an interval and is depicted by a box above; + indicates the tick.

Our reading of the diagram, step by step:

- T=0, tick: Load=1 and In=1, but these inputs are not considered yet, so Out=0.
- T=0, tock: Out=1 because Load=1 and In=1; T is now equal to 1.
- T=1, tick: Load=1 and In=0, but Out=1 (that is, In(T-1)).
- T=1, tock: the Load=1 and In=0 from the T=1 tick are now considered, so Out=0, and T becomes 2.
- T=2, tick: Load=0 and In=0; Out is still the same as In(1).
- T=2, tock: Out is actually Out(T-1), which is Out(2), because T is now equal to 3, the beginning of the next clock cycle.
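To make the walkthrough easier to check, here is a minimal sketch that replays the diagram's inputs through a cycle-level model of a 1-bit register. It assumes the book's Bit behavior (Out takes In if Load was 1 in the previous cycle, otherwise keeps its value), an initial Out of 0, and a two-phase clock in which the inputs are sampled on the tick and Out changes on the tock. The class `Bit` and the function `run` are hypothetical helpers written for illustration, not the course's hardware simulator.

```python
class Bit:
    """Hypothetical cycle-level model of a 1-bit register.

    Assumptions: Out starts at 0, Load and In are sampled on the tick,
    and the sampled value becomes visible on Out at the tock.
    """

    def __init__(self) -> None:
        self.out = 0    # value currently visible on Out
        self._next = 0  # value latched at the tick, committed at the tock

    def tick(self, load: int, in_: int) -> None:
        # First half of the cycle: sample the inputs. Out does not change yet.
        self._next = in_ if load else self.out

    def tock(self) -> None:
        # Second half of the cycle: the latched value appears on Out.
        self.out = self._next


def run(inputs):
    """Return (T, phase, Load, In, Out) rows for every half-cycle."""
    bit = Bit()
    rows = []
    for t, (load, in_) in enumerate(inputs):
        bit.tick(load, in_)
        rows.append((t, "tick", load, in_, bit.out))
        bit.tock()
        rows.append((t, "tock", load, in_, bit.out))
    return rows


# (Load, In) for T=0, 1, 2, as read from the diagram above.
for row in run([(1, 1), (1, 0), (0, 0)]):
    print(row)
```

Under these assumptions, Out reads 0, 1, 1, 0, 0, 0 across the six half-cycles, matching the steps listed above. If each cycle is taken to end at its tock, the value visible after the tock of cycle T is what the book's notation calls Out(T+1), that is, In(T) when Load(T)=1 and Out(T) otherwise.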

Does this mean that when T is at tock, it is updated to T+1 first so that Out can equal Out(T-1) or In(T-1), before the In and Load bits are even considered?

Thanks in advance; your feedback is truly appreciated,

elshae